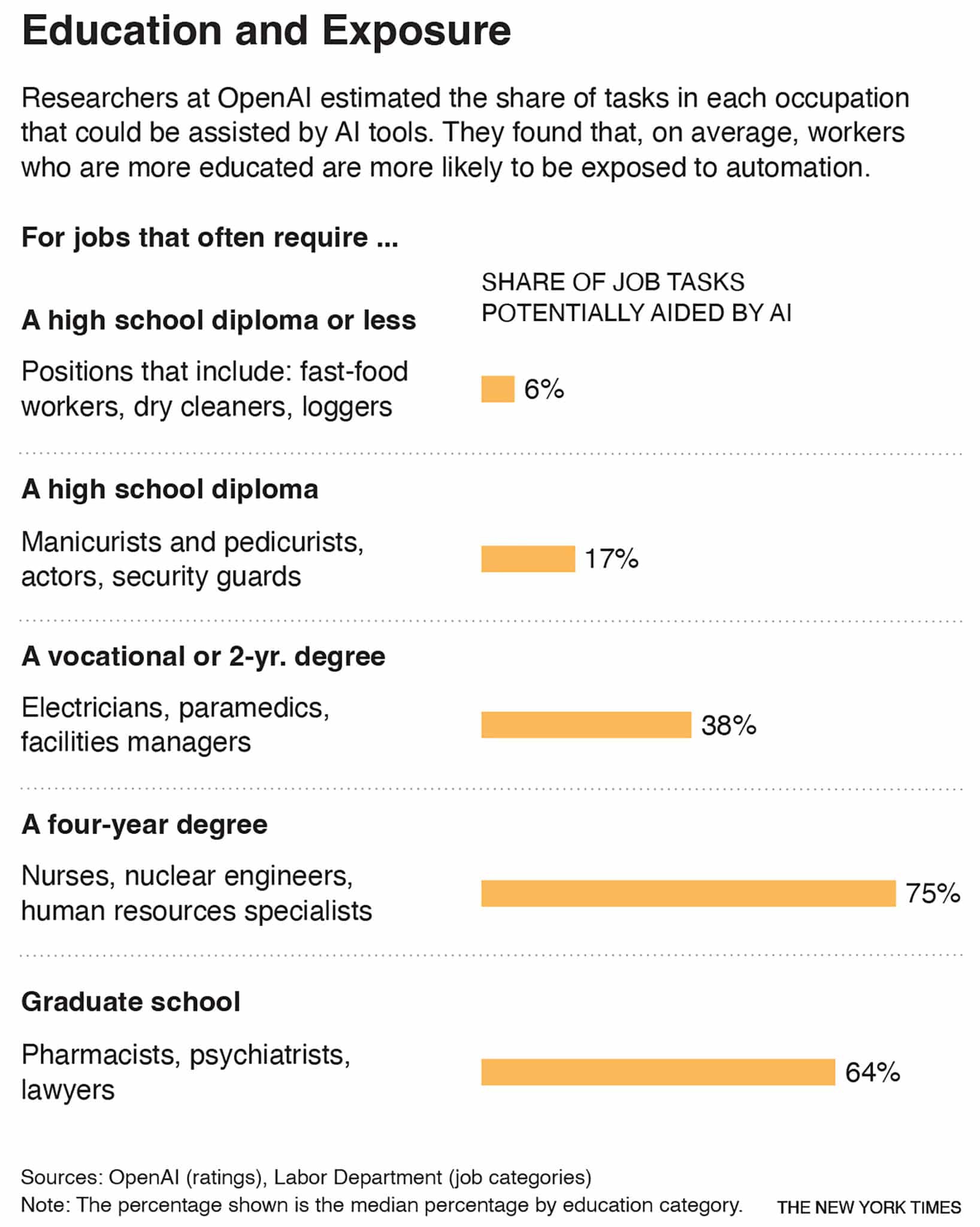

The American workers who have had their careers upended by automation in recent decades have largely been less educated, especially men working in manufacturing.

But the new kind of automation — artificial intelligence systems called large language models, like ChatGPT and Google’s Bard — is changing that. These tools can rapidly process and synthesize information and generate new content. The jobs most exposed to automation now are office jobs — those that require more cognitive skills, creativity and high levels of education. The workers affected are likelier to be highly paid and slightly likelier to be women, a variety of research has found.

“It’s surprised most people, including me,” said Erik Brynjolfsson, a professor at the Stanford Institute for Human-Centered AI, who had predicted that creativity and tech skills would insulate people from the effects of automation. “To be brutally honest, we had a hierarchy of things that technology could do, and we felt comfortable saying things like creative work, professional work, emotional intelligence would be hard for machines to ever do. Now that’s all been upended.”

A range of new research has analyzed the tasks of American workers, using the Labor Department’s O*Net database, and hypothesized which of them large language models could do. It has found these models could significantly help with tasks in one-fifth to one-quarter of occupations. In a majority of jobs, the models could do some of the tasks, according to the analyses, which include studies from Pew Research Center and Goldman Sachs.

For now, the models still sometimes produce incorrect information and are more likely to assist workers than replace them, said Pamela Mishkin and Tyna Eloundou, researchers at OpenAI, the company and research lab behind ChatGPT. They did a similar study, analyzing the 19,265 tasks done in 923 occupations, and found that large language models could do some of the tasks that 80% of American workers do.

Yet they also found reason for some workers to fear that large language models could displace them, in line with what Sam Altman, OpenAI’s CEO, told The Atlantic last month: “Jobs are definitely going to go away, full stop.”

The researchers asked an advanced model of ChatGPT to analyze the O*Net data and determine which tasks large language models could do. It found that 86 jobs were entirely exposed (meaning every task could be assisted by the tool). The human researchers said 15 jobs were. The job that both the humans and the AI agreed was most exposed was mathematician.

Just 4% of jobs had zero tasks that could be assisted by the technology, the analysis found. They included athletes, dishwashers and those assisting carpenters, roofers or painters. Yet even tradespeople could use AI for parts of their jobs, like scheduling, customer service and route optimization, said Mike Bidwell, CEO of Neighborly, a home services company.

While OpenAI has a business interest in promoting its technology as a boon to workers, other researchers said there were still uniquely human capabilities that were not (yet) able to be automated — like social skills, teamwork, care work and the skills of tradespeople. “We’re not going to run out of things for humans to do anytime soon,” Brynjolfsson said. “But the things are different: learning how to ask the right questions, really interacting with people, physical work requiring dexterity.”

For now, large language models will probably help many workers be more productive in their existing jobs, researchers say, akin to giving office workers, even entry-level ones, a chief of staff or a research assistant (though that could signal trouble for human assistants).

Take writing code: A study of GitHub’s Copilot, an AI program that helps programmers by suggesting code and functions, found that those using it were 56% faster than those doing the same task without it.

“There’s a misconception that exposure is necessarily a bad thing,” Mishkin said. After reading descriptions of every occupation for the study, she and her colleagues learned “an important lesson,” she said: “There’s no way a model is ever going to do all of this.”

Large language models could help write legislation, for instance, but could not pass laws. They could act as therapists — people could share their thoughts, and the models could respond with ideas based on proven regimens — but they do not have human empathy or the ability to read nuanced situations.

The version of ChatGPT open to the public has risks for workers: It often gets things wrong, can reflect human biases and is not secure enough for businesses to trust with confidential information. Companies that use it get around these obstacles with tools that tap its technology in a so-called closed domain — meaning they train the model only on certain content and keep any inputs private.

Morgan Stanley and AI

Morgan Stanley uses a version of OpenAI’s model made for its business that was fed about 100,000 internal documents, more than 1 million pages. Financial advisers use it to help them find information to answer client questions quickly, like whether to invest in a certain company. (Previously, this required finding and reading multiple reports.)

It leaves advisers more time to talk with clients, said Jeff McMillan, who leads data analytics and wealth management at the firm. The tool does not know about individual clients or any human touch that might be needed, such as when a client is going through a divorce or an illness.

Other Workplaces

Aquent Talent, a staffing firm, is using a business version of Bard. Usually, humans read through workers’ resumes and portfolios to find a match for a job opening; the tool can do it much more efficiently. Its work still requires a human audit, though, especially in hiring, because human biases are built in, said Rohshann Pilla, president of Aquent Talent.

Harvey, which is funded by OpenAI, is a startup selling a tool like this to law firms. Senior partners use it for strategy, like coming up with 10 questions to ask in a deposition or summarizing how the firm has negotiated similar agreements.

“It’s not, ‘Here’s the advice I’d give a client,’” said Winston Weinberg, a co-founder of Harvey. “It’s, ‘How can I filter this information quickly so I can reach the advice level?’ You still need the decision-maker.”

He said it’s especially helpful for paralegals or associates. They use it to learn — asking questions like: What is this type of contract for, and why was it written like this? — or to write first drafts, like summarizing a financial statement.

“Now all of a sudden, they have an assistant,” he said. “People will be able to do work that’s at a higher level faster in their career.”

Other people studying how workplaces use large language models have found a similar pattern: They help junior employees most. A study of customer support agents by Brynjolfsson and colleagues found that using AI increased productivity by 14% overall and by 35% for the lowest-skilled workers, who moved up the learning curve faster with its assistance.

“It closes gaps between entry-level workers and superstars,” said Robert Seamans of New York University’s Stern School of Business, who co-wrote a paper finding that the occupations most exposed to large language models were telemarketers and certain teachers.

The last round of automation, affecting manufacturing jobs, increased income inequality by depriving workers without college educations of high-paying jobs, research has shown.

AI could perhaps do this again — for example, if senior managers called on large language models to do the work of junior staffers, potentially increasing the earnings of executives while displacing the jobs of those with less experience. But some scholars say large language models could do the opposite — decreasing inequality between the highest-paid workers and everyone else.

“My hope is it will actually allow people with less formal education to do more things,” said David Autor, a labor economist at MIT, “by lowering barriers to entry for more elite jobs that are well paid.”

© 2023 The New York Times Company. This article originally appeared in The New York Times.